Shifu in OpenYurt Cluster

OpenYurt is a cloud-edge computing platform that converts existing Kubernetes clusters into OpenYurt clusters, extending the capabilities of Kubernetes to the edge. OpenYurt provides diverse features for cloud-edge collaborative development, such as YurtTunnel to bridge cloud-edge communication, Yurt-App-Manager for easy deployment, operation, and maintenance of applications per node unit, and YurtHub to provide edge autonomy.

Developers can focus on developing cloud-edge applications without worrying about the operation and maintenance of the underlying architecture. As a Kubernetes-native open source IoT development framework, Shifu is compatible with various IoT device protocols and abstracts each device into a microservice software object. The two complement each other very well. In particular, once YurtDeviceController is added to OpenYurt, Shifu can abstract devices in a way that is native to OpenYurt, greatly improving efficiency for IoT developers.

With OpenYurt and Shifu, we can turn complex IoT and cloud-edge collaborative development into simple web development.

Introduction

This article is a guide to using Shifu to integrate RTSP cameras into an OpenYurt cluster. It covers the basic operations of Shifu, Docker, Linux, Kubernetes, and OpenYurt. Any developer can learn how to develop with Shifu through this article.

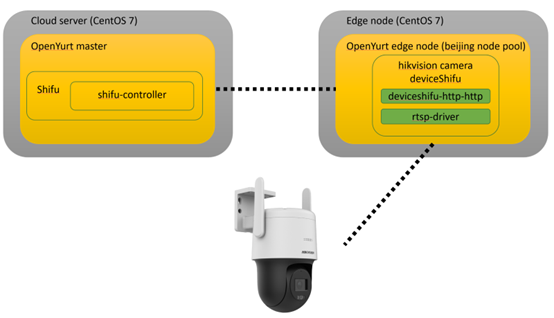

The Shifu architecture in this article is as follows:

The northbound side exposes an HTTP API via deviceshifu-http-http, and the southbound side interacts with the actual device via rtsp-driver.

Objectives

- deploy OpenYurt on the Server side and the Edge side via yurtctl, and add the Edge side to the Server-side cluster

- deploy the digital twin of the webcam on the Edge side

- realize remote automation control of the webcam via HTTP

Required devices

- two virtual machines running Linux; the Server and Edge machines should have 4 cores with 16 GB RAM and 2 cores with 8 GB RAM respectively

- a webcam that supports the RTSP protocol; the camera model used in this article is Hikvision DS-2DE3Q140CN-W

Software environment

- CentOS 7.9.2009

- Go v1.17.1

- yurtctl v0.6.1

- kubectl: v1.19.8 (installed by yurtctl)

Step 1 Install and deploy the OpenYurt cluster

This article follows the official OpenYurt tutorial.

First, download OpenYurt by cloning the project directly from the official GitHub repository:

git clone https://github.com/openyurtio/openyurt.git

Next, let's download the v0.6.1 version of yurtctl:

curl -LO https://github.com/openyurtio/openyurt/releases/download/v0.6.1/yurtctl

chmod +x yurtctl

Server-side deployment

Create the OpenYurt cluster on the Server side:

./yurtctl init --apiserver-advertise-address <SERVER_IP> --openyurt-version latest --passwd 123

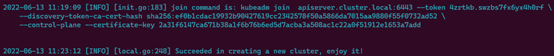

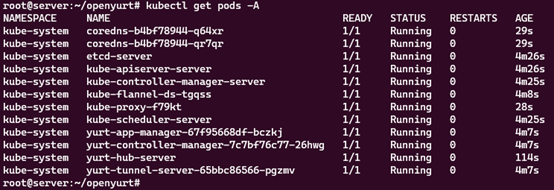

The cluster has been created successfully when you see the following message. Note the --token value in the output; it is used later to add the Edge node to the cluster.

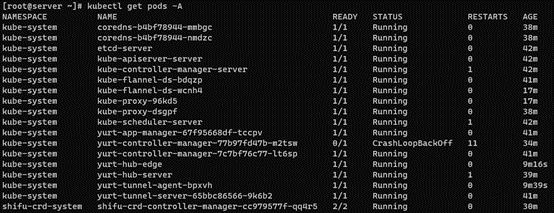

Next, take a look at the status of each Pod by running kubectl get pods -A:

Problems

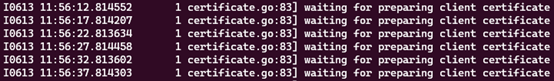
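When the Pod list is long, it can help to filter it down to the Pods that need attention. The helper below is a minimal sketch, not part of OpenYurt or Shifu: the function name unhealthy_pods is hypothetical, and it assumes the default column order of kubectl get pods -A (NAMESPACE, NAME, READY, STATUS, ...).

```shell
# List Pods whose STATUS is neither Running nor Completed.
# Reads the output of `kubectl get pods -A --no-headers` on stdin.
# ASSUMPTION: columns are NAMESPACE NAME READY STATUS RESTARTS AGE.
unhealthy_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " (" $4 ")" }'
}

# Usage on the Server node:
#   kubectl get pods -A --no-headers | unhealthy_pods
```

Any Pod printed by this filter is a candidate for kubectl logs, as in the cases described next.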

If you encounter the following error in the output of kubectl logs yurt-hub-server -n kube-system:

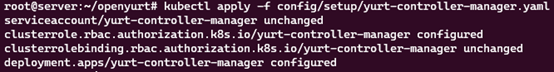

Please try kubectl apply -f config/setup/yurt-controller-manager.yaml (method from https://github.com/openyurtio/openyurt/issues/872#issuecomment-1148167419)

In addition, if you encounter the following output in kubectl logs yurt-hub-server -n kube-system.

Please try kubectl apply -f config/setup/yurthub-cfg.yaml

If similar logs appear in yurt-tunnel-server and yurt-tunnel-agent, fix the RBAC issue in yurt-tunnel with the following commands:

kubectl apply -f config/setup/yurt-tunnel-agent.yaml

kubectl apply -f config/setup/yurt-tunnel-server.yaml

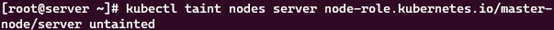

Untaint the master node so that it can run the Shifu controller:

kubectl taint nodes server node-role.kubernetes.io/master-

At this point, the Server-side deployment is complete.

Deploy the Edge side

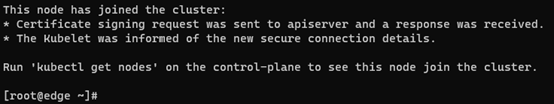

First, run yurtctl join with the token generated when you initialized the Server side:

./yurtctl join <MASTER_IP>:6443 --token <MASTER_INIT_TOKEN> --node-type=edge --discovery-token-unsafe-skip-ca-verification --v=5

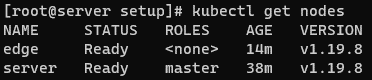
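Because the join command is long and easy to mistype, one option is to assemble it from two inputs and review it before running it. The sketch below is illustrative only: print_join_cmd is a hypothetical helper, and the token check assumes the kubeadm bootstrap-token shape (six characters, a dot, sixteen characters), which may not match what your yurtctl version prints.

```shell
# Print the `yurtctl join` command for review instead of running it.
# Arguments: <server-ip> <token>
# ASSUMPTION: the token looks like a kubeadm bootstrap token,
# e.g. abcdef.0123456789abcdef (lowercase letters and digits only).
print_join_cmd() {
  server_ip=$1
  token=$2
  if [ -z "$server_ip" ] || [ -z "$token" ]; then
    echo "usage: print_join_cmd <server-ip> <token>" >&2
    return 2
  fi
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "token does not look like abcdef.0123456789abcdef" >&2
    return 1
  fi
  echo "./yurtctl join ${server_ip}:6443 --token ${token} --node-type=edge --discovery-token-unsafe-skip-ca-verification --v=5"
}
```

The flags in the printed command are exactly those used above; only the address and token are substituted.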

Verify the Node status with kubectl get nodes.
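To turn that check into something scriptable, the node list can be tested for specific node names. This is a small sketch under stated assumptions: nodes_ready is a hypothetical helper, and the node names server and edge are taken from the commands used elsewhere in this article, so adjust them to your own hostnames.

```shell
# Succeed only if every named node reports a STATUS starting with "Ready".
# Reads `kubectl get nodes --no-headers` on stdin.
# ASSUMPTION: columns are NAME STATUS ROLES AGE VERSION.
nodes_ready() {
  awk -v want="$*" '
    BEGIN { n = split(want, names, " ") }
    $2 ~ /^Ready/ { ready[$1] = 1 }
    END {
      bad = 0
      for (i = 1; i <= n; i++)
        if (!(names[i] in ready)) { print names[i] " is not Ready"; bad = 1 }
      exit bad
    }'
}

# Usage on the Server node:
#   kubectl get nodes --no-headers | nodes_ready server edge
```

A status such as NotReady does not match, so the function returns a non-zero exit code and names the node that is still joining.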

At this point, a cluster with one Server node and one Edge node has been created.

Step 2 Deploy Shifu in the cluster

Next, let's deploy Shifu to the OpenYurt cluster.

On the Server side, clone the Shifu project locally.

git clone https://github.com/Edgenesis/shifu.git

cd shifu/

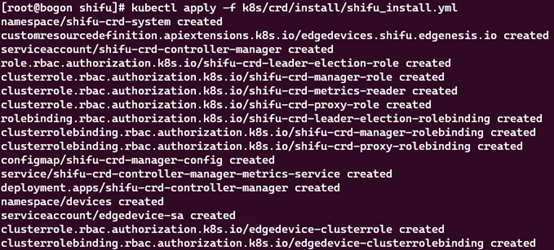

Next, install Shifu.

kubectl apply -f pkg/k8s/crd/install/shifu_install.yml

Check the Pod status with kubectl get pods -A.

You should see the Pod running in the shifu-crd-system namespace.

At this point, Shifu is successfully installed.

Step 3 Deploy the camera's digital twin deviceShifu

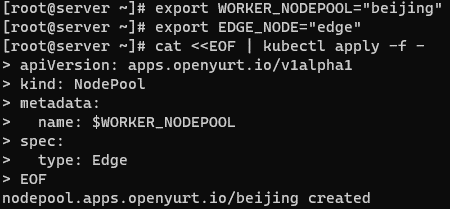

OpenYurt provides a very convenient node pool (NodePool) feature that allows us to manage groups of nodes and deploy workloads to them.

Create a beijing node pool:

export WORKER_NODEPOOL="beijing"

export EDGE_NODE="edge"

cat <<EOF | kubectl apply -f -
apiVersion: apps.openyurt.io/v1alpha1
kind: NodePool
metadata:
  name: $WORKER_NODEPOOL
spec:
  type: Edge
EOF

The output is as follows.

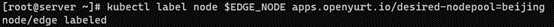

Next, label the Edge node so that it joins the beijing NodePool:

kubectl label node $EDGE_NODE apps.openyurt.io/desired-nodepool=beijing

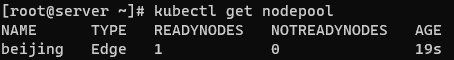

Check the status of the NodePool; the READYNODES column should show one node.

kubectl get nodepool
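If you want to read that value in a script rather than by eye, the column can be located by its header name. The following is a minimal sketch: nodepool_ready_nodes is a hypothetical helper, and it assumes only that the output has a header row containing a READYNODES column and that no earlier column is empty.

```shell
# Print the READYNODES value for one NodePool.
# Reads `kubectl get nodepool` output (including the header row) on stdin
# and finds the column by header name rather than by fixed position.
nodepool_ready_nodes() {
  awk -v pool="$1" '
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == "READYNODES") col = i; next }
    $1 == pool && col { print $col; found = 1 }
    END { exit (found ? 0 : 1) }'
}

# Usage on the Server node:
#   kubectl get nodepool | nodepool_ready_nodes beijing
```

The function exits with a non-zero status when the pool is not listed, which makes it usable in a retry loop while the label is still being processed.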

Since IoT edge nodes serving the same scenario are usually grouped together, you can use OpenYurt's UnitedDeployment feature to automate deployment based on the NodePool.

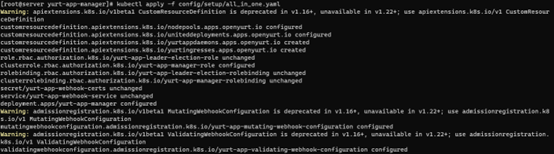

Install Yurt-app-manager:

git clone https://github.com/openyurtio/yurt-app-manager.git

cd yurt-app-manager

kubectl apply -f config/setup/all_in_one.yaml

Use UnitedDeployment to deploy a virtual Hikvision camera with the following YAML files.

deviceshifu-camera-unitedDeployment.yaml

apiVersion: apps.openyurt.io/v1alpha1
kind: UnitedDeployment
metadata:
  labels:
    controller-tools.k8s.io: "1.0"
  name: deviceshifu-hikvision-camera-deployment
spec:
  selector:
    matchLabels:
      app: deviceshifu-hikvision-camera-deployment
  workloadTemplate:
    deploymentTemplate:
      metadata:
        labels:
          app: deviceshifu-hikvision-camera-deployment
        name: deviceshifu-hikvision-camera-deployment
        namespace: default
      spec:
        selector:
          matchLabels:
            app: deviceshifu-hikvision-camera-deployment
        template:
          metadata:
            labels:
              app: deviceshifu-hikvision-camera-deployment
          spec:
            containers:
            - image: edgehub/deviceshifu-http-http:v0.0.1
              name: deviceshifu-http
              ports:
              - containerPort: 8080
              volumeMounts:
              - name: deviceshifu-config
                mountPath: "/etc/edgedevice/config"
                readOnly: true
              env:
              - name: EDGEDEVICE_NAME
                value: "deviceshifu-hikvision-camera"
              - name: EDGEDEVICE_NAMESPACE
                value: "devices"
            - image: edgenesis/camera-python:v0.0.1
              name: camera-python
              ports:
              - containerPort: 11112
              volumeMounts:
              - name: deviceshifu-config
                mountPath: "/etc/edgedevice/config"
                readOnly: true
              env:
              - name: EDGEDEVICE_NAME
                value: "deviceshifu-hikvision-camera"
              - name: EDGEDEVICE_NAMESPACE
                value: "devices"
              - name: IP_CAMERA_ADDRESS
                value: "<CAMERA_IP>"
              - name: IP_CAMERA_USERNAME
                value: "<CAMERA_USERNAME>"
              - name: IP_CAMERA_PASSWORD
                value: "<CAMERA_PASSWORD>"
              - name: IP_CAMERA_CONTAINER_PORT
                value: "11112"
              - name: PYTHONUNBUFFERED
                value: "1"
            volumes:
            - name: deviceshifu-config
              configMap:
                name: deviceshifu-hikvision-camera-configmap-0.0.1
            serviceAccountName: edgedevice-sa
  topology:
    pools:
    - name: beijing
      nodeSelectorTerm:
        matchExpressions:
        - key: apps.openyurt.io/nodepool
          operator: In
          values:
          - beijing
      replicas: 1
  revisionHistoryLimit: 5

deviceshifu-camera-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: deviceshifu-hikvision-camera-deployment
  name: deviceshifu-hikvision-camera
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: deviceshifu-hikvision-camera-deployment
  type: LoadBalancer

camera-edgedevice.yaml

apiVersion: shifu.edgenesis.io/v1alpha1
kind: EdgeDevice
metadata:
  name: deviceshifu-hikvision-camera
  namespace: devices
spec:
  sku: "HikVision Camera"
  connection: Ethernet
  address: 0.0.0.0:11112
  protocol: HTTP

deviceshifu-camera-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: deviceshifu-hikvision-camera-configmap-0.0.1
  namespace: default
data:
  driverProperties: |
    driverSku: HikVision
    driverImage: edgenesis/camera-python:v0.0.1
  instructions: |
    capture:
    info:
    stream:
    move/up:
    move/down:
    move/left:
    move/right:
  telemetries: |
    device_health:
      properties:
        instruction: info
        initialDelayMs: 1000
        intervalMs: 1000
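The instruction names in this ConfigMap line up with the HTTP paths called later in this article (capture, stream, move/up and so on). As a rough illustration of that mapping, the sketch below turns a list of instruction keys into URLs. It assumes that each key under instructions is served as a path on the deviceShifu Service; instruction_urls is a hypothetical helper, not part of Shifu.

```shell
# Print one URL per instruction name read from stdin (one "name:" per line).
# Argument: the base URL of the deviceShifu Service.
# ASSUMPTION: every instruction key is exposed as an HTTP path of the same name.
instruction_urls() {
  base=$1
  sed -e 's/^[[:space:]]*//' -e 's/:[[:space:]]*$//' -e '/^$/d' |
    while IFS= read -r name; do
      printf '%s/%s\n' "$base" "$name"
    done
}

# Usage: paste the keys from the "instructions" block.
#   printf 'capture:\ninfo:\nmove/up:\n' | instruction_urls http://deviceshifu-hikvision-camera
```

Adding a new instruction to the ConfigMap would, under the same assumption, add one more line to this output.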

Put the four files into a directory as follows.

camera-unitedDeployment/
├── camera-edgedevice.yaml
├── deviceshifu-camera-configmap.yaml
├── deviceshifu-camera-service.yaml
└── deviceshifu-camera-unitedDeployment.yaml

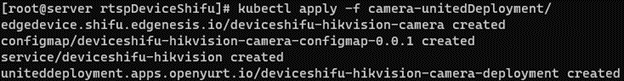
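A quick way to catch a misnamed or missing file before applying is to check the directory against the expected listing. This is a minimal sketch: check_manifests is a hypothetical helper, and the file names are those shown in the directory tree above.

```shell
# Report any of the four manifest files missing from the given directory.
# Argument: the directory to check (defaults to camera-unitedDeployment).
check_manifests() {
  dir=${1:-camera-unitedDeployment}
  missing=0
  for f in camera-edgedevice.yaml \
           deviceshifu-camera-configmap.yaml \
           deviceshifu-camera-service.yaml \
           deviceshifu-camera-unitedDeployment.yaml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_manifests camera-unitedDeployment && echo "all manifests present"
```

The function only checks that the files exist; it does not validate their YAML content.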

Next, deploy:

kubectl apply -f camera-unitedDeployment/

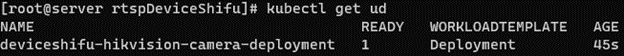

Check the UnitedDeployment status via kubectl get ud.

Confirm that the Pod is deployed on the Edge node in the beijing NodePool with kubectl get pods -owide.

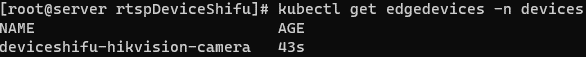

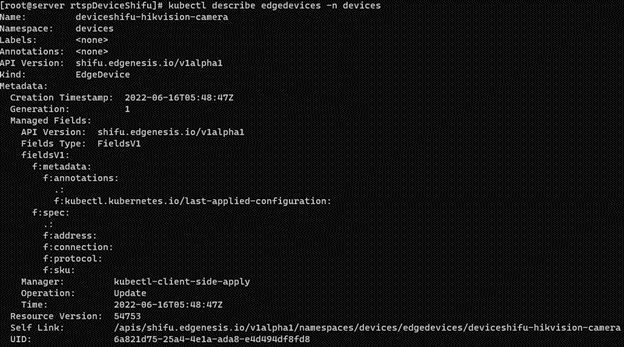

We can check Shifu's virtual devices in the cluster via kubectl get edgedevices -n devices.

Then use kubectl describe edgedevices -n devices to see the details of the devices such as configuration, status, etc.

At this point, the digital twin of the camera is deployed.

Running results

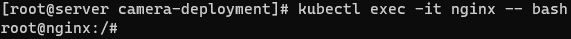

Next, we control the camera. Here we use an nginx Pod to represent the application.

kubectl run nginx --image=nginx

Once nginx is running, open a shell in the nginx Pod with kubectl exec -it nginx -- bash.

The camera can be controlled directly with the following command, using one of up, down, left, or right as the last path segment.

curl deviceshifu-hikvision-camera/move/{up/down/left/right}
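For repeated moves, the request can be wrapped in a small function that rejects anything other than the four directions. This is a sketch rather than part of Shifu: camera_move and the CURL override variable are hypothetical names, and the Service name is the one defined in deviceshifu-camera-service.yaml.

```shell
# Send one move instruction to the camera's deviceShifu Service.
# Argument: up, down, left or right.
# Set CURL=echo for a dry run that only prints the request target.
camera_move() {
  case $1 in
    up|down|left|right)
      ${CURL:-curl} "deviceshifu-hikvision-camera/move/$1" ;;
    *)
      echo "unknown direction: $1 (expected up, down, left or right)" >&2
      return 1 ;;
  esac
}

# Example, run inside the nginx Pod: nudge the camera once in each direction.
#   for d in up down left right; do camera_move "$d"; done
```

Running with CURL=echo first is a cheap way to confirm the paths before the camera actually moves.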

To view the current snapshot and the video stream, we need to proxy the camera's service locally via kubectl port-forward service/deviceshifu-hikvision-camera 30080:80 --address='0.0.0.0'.

You can then view the image or video stream directly by entering the server's IP plus the port number in the browser:

<SERVER_IP>:30080/capture

<SERVER_IP>:30080/stream

Conclusion

In this article, we showed you how to deploy Shifu in an OpenYurt cluster to support an RTSP camera.

In the future, we will try to integrate Shifu with OpenYurt's YurtDeviceController and extend the capabilities of OpenYurt to manage more IoT devices in a way that is native to OpenYurt.